Patch & Release! Part 1

In this two-part post, we address the confusion over how software vendors develop and release approved patches (part 1) and provide our recommendations for how to most effectively test patches prior to deployment (part 2).

2017-18 — A Big Year for Patching

By now you’ve heard of the Meltdown and Spectre vulnerabilities that were reported all over the news in January 2018. The vulnerabilities impacted almost any system with a chip produced by Intel, ARM and AMD, which is to say, just about every machine with a central processing unit (CPU). As you’ve likely noticed at your own workplace during the past month, companies have been in a patch-testing frenzy as they wonder whether to apply vendor-released patches amid fears over negative system impact. Unfortunately, these fears have been validated by reports of computers “spontaneously rebooting” or not even starting after the patch installs. As recently as last month, Intel’s Navin Shenoy made a statement that has called into question, in the eyes of some, the very worth of patching itself: “We recommend that OEMs, cloud service providers, system manufacturers, software vendors and end users stop deployment of current versions, as they may introduce higher than expected reboots and other unpredictable system behavior.”

Safe to say, there has been much confusion among IT and business leaders regarding the patch release process. Some software vendors feel rushed to push patches as quickly as possible in order to reduce the likelihood of attack and to prevent public criticism; other vendors prefer that patch releases allow sufficient time for testing and refinement, particularly when the systems involved are of a critical nature (e.g. medical equipment). As one CEO rightly notes, “Everybody is saying ‘we’re not affected’ or ‘hey, we released patches,’ and it has been really confusing…you can’t bring down a power grid just to try out a patch.”

So what is a company to do: apply new patches as quickly as possible to protect against possible threats, or test extensively even if that means applying patches later than recommended from a security standpoint? And how can software owners get more visibility into their vendors’ patch release process? To answer these questions, we will look behind the curtain of the patch release process and revisit some of Apple’s and Microsoft’s patch releases from the past six months.

Understanding the Patch Release Process

At the highest level, the overall patch process for a software vendor is as follows: (1) a software vendor learns that part of a program is not working as intended (a bug) or identifies an attack possibility (a vulnerability); (2) the software vendor develops a patch to eliminate the bug or vulnerability; (3) the software vendor releases the patch through the internet and notifies its customers; and (4) customers are left to test and implement the patch for end users.

We should note that pirated copies of software cannot receive updates directly from the vendor.

Breaking the news — how the public learns about software vulnerabilities

How a vulnerability is identified brings additional complexity to the patch process for a software vendor. In short, public awareness of a vulnerability generally happens one of three ways:

- Identified by Vendor or Threat Hunter: A vendor may identify a vulnerability internally, or an ethical threat hunter may notify the vendor through a bug bounty program. When the patch is released, the public is made aware of the vulnerability and patch availability at the same time.

- Identified by Criminal: A criminal exploits the vulnerability and reports of exploits hit the news. In this case, the vendor has to develop the patch while the exploits are already occurring.

- Identified by a Third Party: Security researchers, third-party users, and third-party developers can also identify vulnerabilities. What’s critical in this case is how the vulnerability is reported. If it’s reported directly to the software vendor by the third party or to an official agency like US-CERT, then the release pattern will be very similar to the first scenario above (identified by vendor or threat hunter). However, if the threat is reported via other channels, such as Twitter, the release process ends up being very similar to the second scenario above (identified by criminal).

In any of the above scenarios, once the public is aware of a vulnerability, so are the criminals. Hence, as soon as the public learns of a vulnerability, software vendors are racing against the clock to develop and test their patch before the vulnerability can be exploited.

Beating the Vulnerability Countdown Clock: Timeline of Ethically-Identified Patch Releases

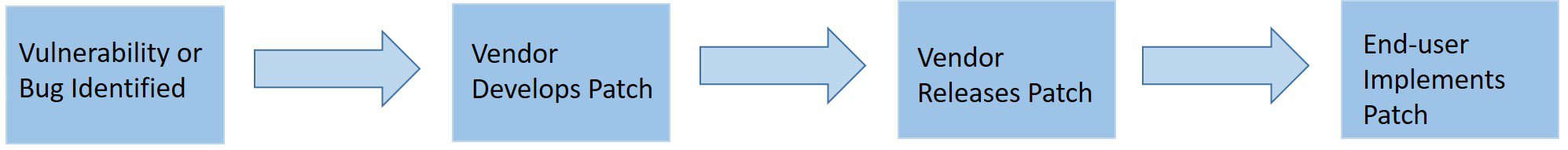

With large enough data sets, it’s possible to develop a reasonable assessment of how the length of the ‘vulnerability to patch timeline’ affects the probability of a vulnerability actually being exploited. A 2015 study by Kenna Security estimates that the probability of a vulnerability being exploited reaches an inflection point of 90 percent between 40 and 60 days after discovery.

Ethical Vendor Identification Timeline

The timeline below identifies the basic patch procedure timing, from ethical vendor identification through the earliest 90% exploitation point of 40 days, as suggested by the Kenna Security study. From a compliance risk perspective, we’ve marked a requirement from the Payment Card Industry Data Security Standard (PCI DSS) that mandates patches to be applied within one month of release.

This timeline includes the following assumptions:

- Ethically identified vulnerabilities are not leaked prior to patch release.

- The vendor promptly begins developing the patch once identification occurs and releases it at 30 days. Actual patch development times may vary, of course.

- From patch release to implementation, testing is successful.

- Automated patch implementation (with or without testing) occurs within 24 hours.

As you can see from the timeline, there is a possibility that if all goes well during the patch development and application process, the patch could get installed before probability of exploitation reaches the 90% mark. However, the windows are still tight even during the smoothest patch release, and there is no guarantee that the exploits won’t happen at a lower percentage of probability than 90%.
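To make the arithmetic behind this timeline concrete, here is a minimal sketch in Python. It is our own illustration, not part of the Kenna Security study or the PCI DSS text. It assumes that the 40-day clock starts when the public learns of the vulnerability (which, in the ethical model, is the patch release), that "one month" can be approximated as 30 days, and that all days are counted from the day the vendor learns of the vulnerability. The class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EthicalTimeline:
    """Day offsets counted from the day the vendor learns of the vulnerability.

    All defaults are the illustrative assumptions listed above, not measured data.
    """
    patch_release_day: int = 30   # assumed development time
    install_delay_days: int = 1   # automated implementation within 24 hours
    pci_window_days: int = 30     # "one month" approximated as 30 days
    exploit_90_days: int = 40     # earliest 90% point after public disclosure

    @property
    def install_day(self) -> int:
        return self.patch_release_day + self.install_delay_days

    @property
    def pci_deadline_day(self) -> int:
        return self.patch_release_day + self.pci_window_days

    @property
    def exploit_90_day(self) -> int:
        # In the ethical model, the public learns of the flaw at patch release.
        return self.patch_release_day + self.exploit_90_days


timeline = EthicalTimeline()
slack = timeline.exploit_90_day - timeline.pci_deadline_day

print("automated install day:", timeline.install_day)
print("PCI DSS deadline day:", timeline.pci_deadline_day)
print("earliest 90% exploitation day:", timeline.exploit_90_day)
print("days between PCI deadline and 90% point:", slack)
```

Under these assumptions, a system patched on the last day allowed by PCI DSS is still only ten days ahead of the earliest 90% point, which is why the windows are tight even when everything goes smoothly.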

Case studies: Patches Released by Microsoft for Ethically-identified Vulnerabilities

WannaCry Patch: released by Microsoft on March 14, 2017

The WannaCry attack is a good example of how the ethical patch window timeline plays out. On March 14, 2017, Microsoft issued security bulletin MS17-010 and released the patches for their products. Two months later, on May 12, 2017, WannaCry began spreading via the EternalBlue vulnerability and within one day had infected over 230,000 computers in over 150 countries. In the WannaCry example, the exploit occurred two months after the patch release. So why was it successful? Two reasons: not all systems were patched, and many legacy systems were past end-of-life and no longer receiving patches from the vendor. This event emphasizes how vulnerabilities, particularly those that are easily exploited, can impact systems on a wide scale soon after a vendor makes them public, even for attacks that are non-targeted.

Equation Editor Executable Patch: released by Microsoft on November 14 — three months after vulnerability identification

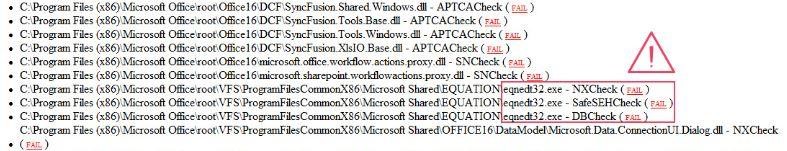

A more recent example of an ethically identified vulnerability with a Microsoft patch is the Equation Editor Executable patch (CVE-2017-11882), a fix for a buffer overflow vulnerability in the old Equation Editor EQNEDT32.exe.

Although this Equation Editor has been replaced by an integrated Equation Editor since 2007, the old Equation Editor is still launched for older documents. This vulnerability was identified by an ethical source, Embedi, and reported to Microsoft on 8/3/2017; Microsoft acknowledged receipt of the report on 8/4/2017.

In the screenshot below, Embedi noted that Binscope marked EQNEDT32.EXE as an unsafe component.

Microsoft was notified of the vulnerability on August 3, 2017, and the patch for CVE-2017-11882 was released on the November 14, 2017 “Patch Tuesday,” thereby extending the pre-release window to a little over three months. To be clear, as long as the vulnerability is not leaked or found by a criminal prior to the patch release, risk is measured only after the release of the patch. Following the patch release, risk of exploitation increases with time, and responsibility for the patch shifts from the vendor to the software owner/user.

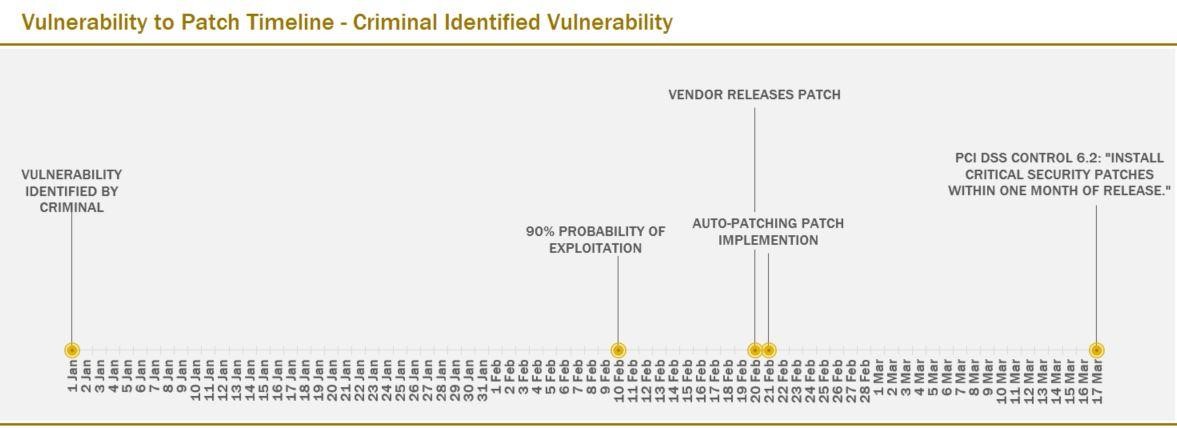

Beating the Vulnerability Countdown Clock: Criminal-identified Vulnerability Timeline

Now let’s explore what the timeline looks like when a criminal identifies the vulnerability first (also known as a zero-day vulnerability):

This timeline includes the following assumptions:

- The exploitation is not stealthy, and public notification occurs promptly after exploitation.

- The vendor promptly begins developing the patch once exploitation occurs and releases it at 10 days. Actual patch development times may vary, of course.

- From patch release to implementation, testing is successful.

- Automated patch implementation (with or without testing) occurs within 24 hours.

If the WannaCry attack had occurred with this timeline model and not been stopped via a web domain takeover, a much greater number of computers could have been infected. As you can see above, in the criminal-identified vulnerability model, the exploitation has a larger window to spread prior to the patch release. Below are a few case studies to better understand how this increased exploitation window impacts the vendors’ patch release process.
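The difference between the two models can be expressed as the number of days a publicly known vulnerability remains unpatched on a given system. The sketch below is a rough illustration using only the assumptions stated in the two timelines (patch release at day 30 versus day 10, automated installation within one day). The function name `exposure_days` is hypothetical.

```python
def exposure_days(public_day: int, patch_release_day: int,
                  install_delay_days: int = 1) -> int:
    """Days a publicly known vulnerability stays unpatched on one system.

    public_day: day the public (and therefore criminals) learn of the flaw.
    patch_release_day: day the vendor ships the patch.
    install_delay_days: time from release to installation (assumed 24 hours).
    """
    install_day = patch_release_day + install_delay_days
    return max(0, install_day - public_day)


# Ethical model: the public learns of the flaw when the patch ships (day 30).
ethical = exposure_days(public_day=30, patch_release_day=30)

# Criminal model: exploitation becomes public on day 0; the patch ships on day 10.
criminal = exposure_days(public_day=0, patch_release_day=10)

print("ethical model exposure (days):", ethical)
print("criminal model exposure (days):", criminal)
```

With these inputs the ethical model leaves a one-day gap, while the criminal model leaves eleven days during which the exploit is already circulating and no installed patch exists. Real figures depend on how quickly the vendor and the software owner each act.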

macOS High Sierra Vulnerability: third-party developer-identified vulnerability disclosed via Twitter on November 28, 2017

Even macOS software owners have to pay close attention to their vendors’ patch release process. Just last November, a vulnerability involving the “Directory Utility” component of macOS High Sierra was discovered that “allowed anyone with physical access to a Mac to gain system administrator access” without requiring a password. The public was made aware of the vulnerability by developer Lemi Orhan Ergin via Twitter:

Dear @AppleSupport, we noticed a *HUGE* security issue at MacOS High Sierra. Anyone can login as “root” with empty password after clicking on login button several times. Are you aware of it @Apple?

— Lemi Orhan Ergin (@lemiorhan) November 28, 2017

From Apple’s perspective, the High Sierra patch release process was the same as it would have been for a criminal-identified vulnerability: they learned about the vulnerability along with everyone else. When a software vendor learns of a vulnerability publicly, it will often provide a workaround to protect systems until the patch can be released (such as adding a filter to block the vulnerability). Following this pattern, Apple published step-by-step instructions to help their customers protect their machines in the short term on the same day that the vulnerability was publicized. However, as a result of the scramble to push a software patch for macOS High Sierra, Apple’s own fix began experiencing glitches. Users reported that the macOS High Sierra upgrades (from 10.13.0 to 10.13.1) restored the vulnerability and that the vulnerability remained unless machines were rebooted. Moreover, Apple’s first release broke file-sharing functions on High Sierra, calling into question Apple’s overall quality control. Apple eventually came out with additional patches without the bugs, thereby forcing software owners to remove the earlier patches and apply an additional set to their systems.

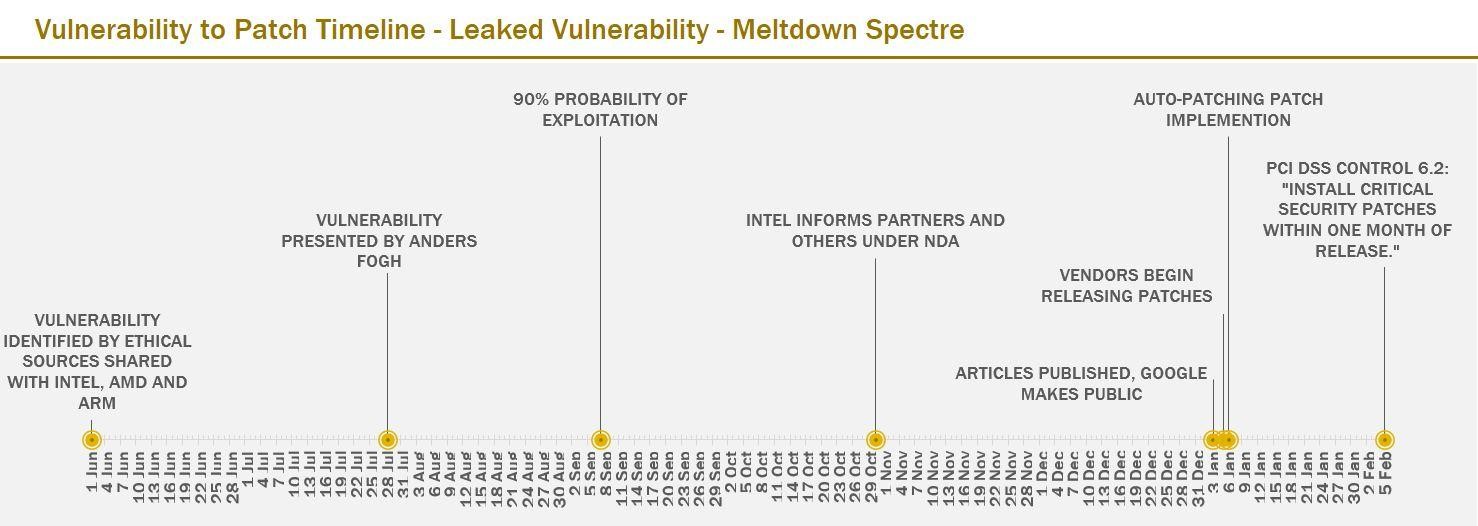

Meltdown/Spectre — a Hybrid Vulnerability Timeline

The recent Meltdown and Spectre set of vulnerabilities had what we call a hybrid timeline because they were originally identified ethically and reported to the vendors. Unfortunately, the vulnerabilities were then leaked in advance of the vendors releasing the patches. The result was that the timeline morphed from ethically-identified into the criminally-identified timeline, described below, beginning with ethical sources notifying chip vendors Intel, AMD and ARM.

There are some interesting items of note here. If the criminals began working on their exploits when Anders Fogh blogged about the vulnerability in July 2017, the 90% probability of exploitation would have been reached on September 7th, 2017, as shown. Unfortunately, it wasn’t until Google made the vulnerabilities public on January 3rd that software vendors raised the priority and began releasing patches on January 5th. If criminals did not begin working on exploits until the January 3rd announcement by Google, then the 90% exploitation point would fall on February 12.

Since PCI DSS gives retailers 30 days from a patch release, IT managers’ patching window (from a compliance standpoint) didn’t change even though the probability of exploit had increased considerably. We could also add the patch fixes and re-releases that were needed to address side effects: some machines hit the blue screen of death (BSOD) and failed, while others rebooted constantly or experienced significant performance degradation. As patch managers waited for the patches to stabilize, each of these issues moved the recommended patch window out, from a compliance standpoint, without actually changing the 90% exploitation probability date.
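The dates above can be checked with a few lines of date arithmetic. The sketch below takes the January 3rd, 2018 disclosure and January 5th, 2018 first patch release from the text, uses the 40-day and 30-day figures discussed earlier, and then adds a purely hypothetical 14-day re-release delay to show how the compliance deadline can slide past the 90% point. The 14-day figure and all variable names are our own assumptions for illustration, not reported data.

```python
from datetime import date, timedelta

EXPLOIT_90_DAYS = 40   # earliest 90% exploitation point after public disclosure
PCI_WINDOW_DAYS = 30   # PCI DSS "one month", approximated as 30 days

disclosure = date(2018, 1, 3)     # Google makes the vulnerabilities public
first_patches = date(2018, 1, 5)  # vendors begin releasing patches

exploit_90 = disclosure + timedelta(days=EXPLOIT_90_DAYS)
pci_deadline = first_patches + timedelta(days=PCI_WINDOW_DAYS)

# Hypothetical: a stable re-release ships 14 days after the first patches,
# and the 30-day compliance window restarts from that re-release.
rerelease = first_patches + timedelta(days=14)
pci_deadline_after_rerelease = rerelease + timedelta(days=PCI_WINDOW_DAYS)

print("90% exploitation point:", exploit_90)
print("PCI deadline (first patches):", pci_deadline)
print("PCI deadline (after re-release):", pci_deadline_after_rerelease)
print("slack, first patches (days):", (exploit_90 - pci_deadline).days)
print("slack, after re-release (days):",
      (exploit_90 - pci_deadline_after_rerelease).days)
```

A negative slack means a team could be fully compliant and still be patching after the earliest 90% exploitation point, which is the gap between compliance-driven and risk-driven patching that this section describes.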

Not All Patches are Created Equal — Looking Ahead

If you hope to manage systems from a risk perspective rather than just from a compliance perspective, then it’s necessary to understand the background of every patch release and become knowledgeable about your software vendors’ process for developing patches. Without this critical information, it’s not possible to fully assess the probability of exploitation for systems in use and prioritize actions for managing vulnerabilities that may exist.

In addition, it’s our recommendation that if you believe a patch was not properly released or that you’re lacking critical information behind the preparation of a patch, you should communicate with your software vendor directly and on a regular basis until the patch has been properly applied.

Finally, we must remember that the creation and release of a patch only represents half the battle. In Part 2 of this post, we’ll take a closer look at patch selection and patch testing processes that software owners should go through prior to patching their own systems. In addition, we will discuss tools and methods for applying patches to Linux distributions.